GDC 2024: Community Sift & the Future of Content Moderation

We're excited to share how Community Sift is creating healthy and safe communities for players with the use of innovative tools and solutions.

Let’s start with a true experience from an Xbox gamer.

Once upon a time, this player, like many of us, logged on to enjoy a game, have fun, and connect with friends. They joined a multiplayer match, still relatively new and far from being an expert, but they were there for the sheer joy of playing.

The gaming session started off like any other. They were having fun with their friends, not always winning, but nonetheless enjoying the experience. However, a few matches in, the atmosphere changed. They began receiving both verbal and written messages from other players criticizing their performance. These messages suggested that they were so bad that they were ruining the game for others. And it only escalated from there.

Our gamer soon found themselves receiving death threats, and messages like “Go kill yourself!”

We all can deal with a little trash talk, but this type of language? Absolutely not.

As you can imagine, receiving these messages was deeply hurtful--and honestly a bit scary. It stirred feelings of anger and fear. Their fun was replaced with negativity.

The very reason this person played--to have fun and feel a sense of community--was stripped away within minutes by some random people on the internet.

And the thing is, we know this story isn’t unique. Many of us have stories like this, where we have experienced harm while gaming online, including harassment, bullying, or even worse.

Community Sift is a content classification and moderation solution that’s powered by generative AI. We’re leading the way to ensure that safe and welcoming communities can exist online by removing harmful and inappropriate content. Historically, scaling moderation and tailoring policies to each game’s specific community guidelines, in real time, across every sort of harm, has been extremely difficult, costly, and time-consuming.

Well, until today, that is.

We’re thrilled to share with you the ways that Community Sift is creating healthy and safe communities for players with the use of innovative tools and solutions that make it easier to foster the safe spaces that players expect and deserve.

We will highlight how AI-powered safety helps build trust with players, drive community engagement, and reduce churn by keeping players playing.

Why Content Moderation is Critical

Content Moderation is the process of overseeing user-generated content on digital platforms to ensure it follows guidelines and standards, removing inappropriate or harmful material as needed. Why is it beneficial to your community?

The first and most important benefit is that it ensures the safety of your players. Pro-active moderation means checking content in the split second before it’s seen by other players. This is the most effective way to safeguard players from harmful interactions, cyberbullying, and potential threats, and contributes to a safer online space that promotes player well-being.

Beyond that, removing toxic content—which includes hate speech, harassment, and inappropriate material—fosters a positive and respectful gaming environment. It not only ensures the safety of the player in the moment, it mitigates future toxic behavior on the platform, contributing to a safer online space and a better gaming experience for all players.

Finally, in the world at large, effective content moderation protects the reputation of your community and your title, attracting and retaining a diverse player base and preventing negative associations that could harm the game’s image. A healthy community keeps your game alive.

The Prevalence of Toxicity & Your Bottom Line

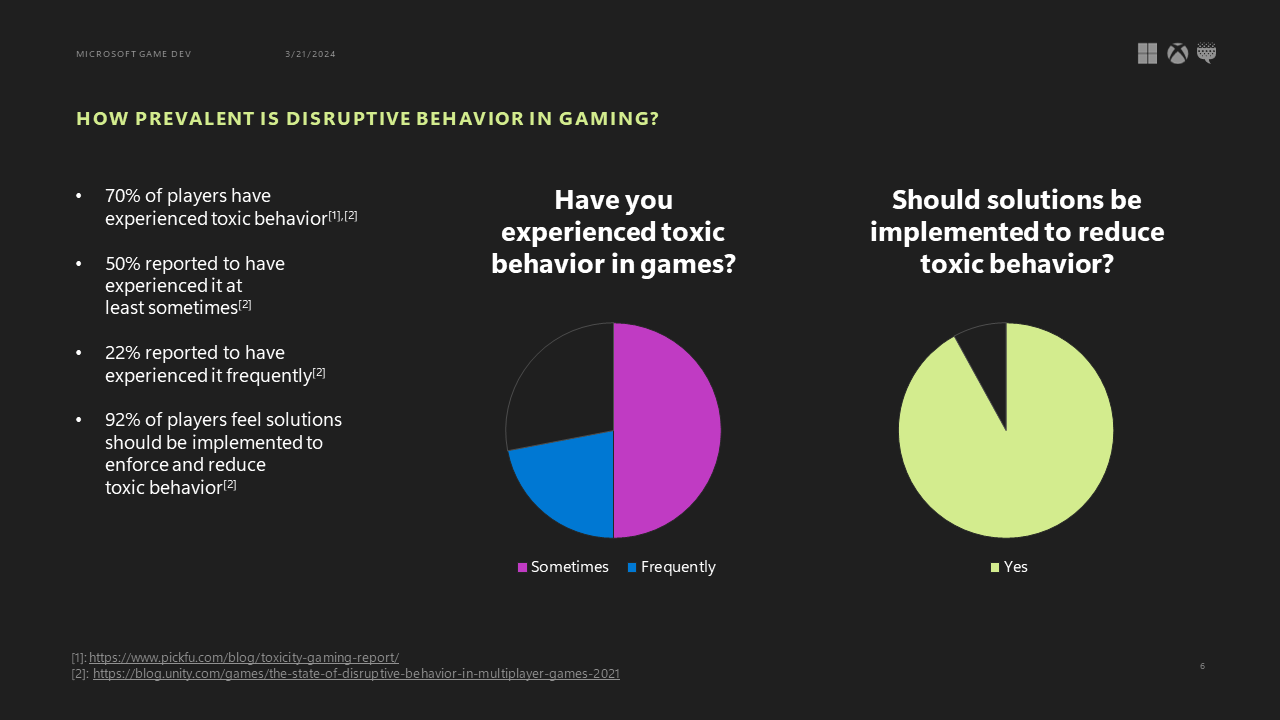

Unfortunately, toxic behavior is all too common. In a recent survey of gamers, over 70% of players reported experiencing toxic behavior. Half of all players had experienced it at least sometimes, and 22% had experienced it frequently. 92% of players agreed that something should be done about toxicity.

The worse news is that it’s affecting your bottom line. In another survey about toxicity in games, players were asked about how they personally respond when faced with hate or harassment. Over 60% of players stated that they have decided against spending money in a game because of how they were treated. 60% have quit matches or the game altogether because of hate or harassment. And almost half have chosen to avoid a game that they might otherwise have liked because of the player base’s reputation.

But what’s most glaring is the self-reported spend in this survey. The amount of money that players reported spending was almost 75% higher on games deemed non-toxic compared to games deemed toxic. This is self-reported spend in a survey as opposed to published game revenues, and we all know that what people say and what people do can be different. But even if you believe that this only speaks to intent, that’s nonetheless significant.

Hate, harassment, and illegal and unwelcome content directly affect your revenue and your costs in terms of paid user acquisition. They affect your bottom line.

Regulations on the Books

In recent years, we’ve seen a surge in new government regulations that aim to protect and support players. While this is a positive development, keeping up with them can be quite a challenge!

You’re probably familiar with GDPR. Then there is the DSA, the European Union’s Digital Services Act, and the OSA, the United Kingdom’s Online Safety Act. They have different scopes, focuses, and requirements, but they share a common goal—creating safer digital spaces.

For instance, according to the European Parliament, the DSA requires tech companies to:

- Set up a user report system.

- Manage player complaints against content moderation decisions.

- Disclose information about your content moderation policies and tools.

- And detect illegal content, act, and escalate all relevant information to authorities.

Many companies will have to publish all of this in a transparency report at least once a year. We understand it’s tough. Xbox has been producing two transparency reports every year since 2022. And don’t forget about the upcoming Age-Appropriate Design Code Act created in California. Staying up to date with all these new and ever-changing policies and regulations is a legal requirement. But don’t worry—you’re not alone! Community Sift is here to help you navigate the ever-changing landscape of compliance and regulations.

The Myth of the “7 Bad Words”

You might be tempted to think that it’s easy enough to build a filter to catch toxic behavior. There’s what, 7 really bad words you don’t want people to say? George Carlin had a famous stand-up routine about this in the 1970s, and while the exact words have changed slightly over time, the myth that “badness” is contained in the words themselves persists.

The idea that content can simply be filtered is old too. The name “Community Sift” was based on the idea of just sifting out bad text, but that was over 10 years ago. A lot has changed in the industry and the technology we employ, and our product evolved accordingly. Toxicity is not the same as vulgarity. It is much harder to find, more complex, and more nuanced.

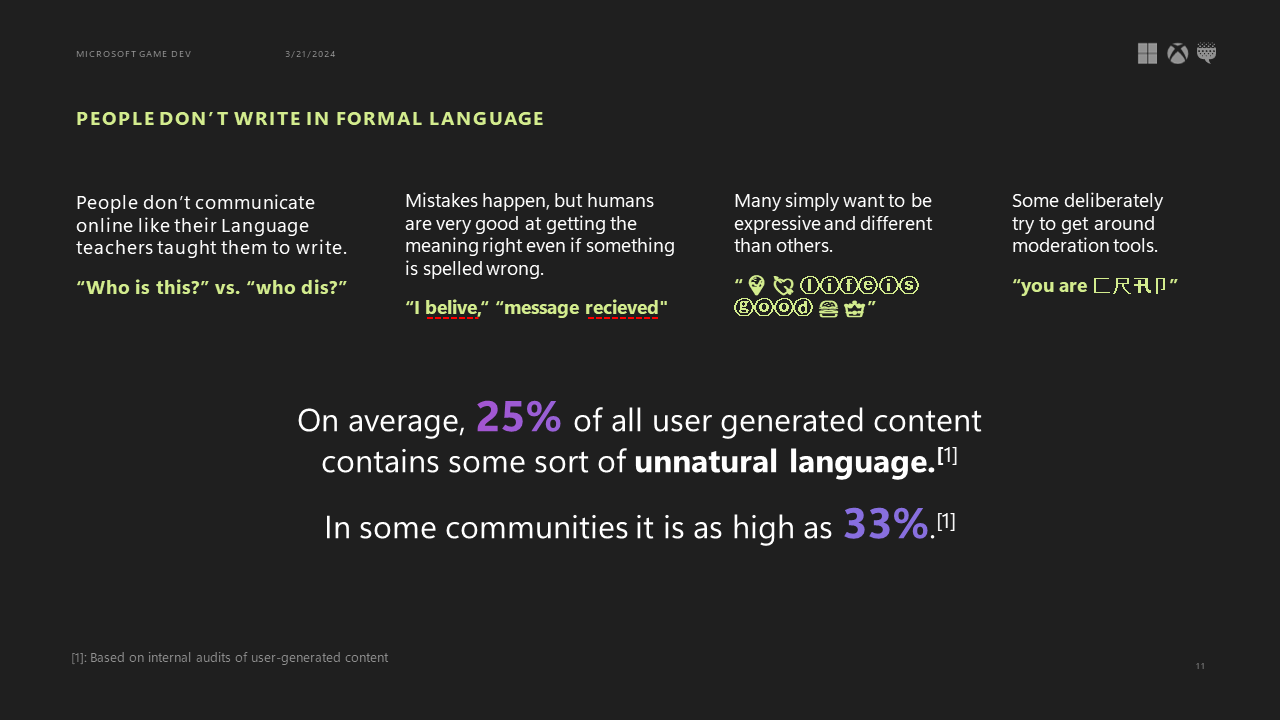

The first difficulty is that people don’t really communicate in perfect, formal language. People don’t chat in complete sentences, nor do they always use words found in the dictionary.

Misspellings are rampant and that’s okay because nobody cares how you spell things in chat. People are good at reading past mistakes and getting the meaning of the message. And that’s good and bad. It's good that your players can communicate, but misspelled harassment is just as toxic as correctly spelled harassment.

And then there are other character sets entirely. People love to pepper their communications with emojis and decorative fonts to make their chats more expressive and different, or, sometimes, to deliberately thwart attempts at moderation. This sort of “unnatural language” trips up traditional rules-based logic, machine learning, and LLMs. It requires a sophisticated solution.
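To make the problem concrete, here is a minimal Python sketch of the kind of text normalization a simple filter might attempt before matching anything. It is an illustration only, not a description of how Community Sift works: the function `normalize_chat` and the `LEET_MAP` table are hypothetical names, and the only library behavior it relies on is the standard `unicodedata.normalize` with the NFKC form.

```python
import re
import unicodedata

# Hypothetical look-alike table; a real system would need far more than this.
LEET_MAP = str.maketrans(
    {"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"}
)
# Invisible characters sometimes inserted to split a word.
ZERO_WIDTH = re.compile(r"[\u200b\u200c\u200d\u2060\ufeff]")
# Any character repeated three or more times in a row.
REPEATS = re.compile(r"(.)\1{2,}")


def normalize_chat(text: str) -> str:
    """Fold decorative fonts, casing, and common substitutions to plain text."""
    # NFKC maps compatibility characters (fullwidth, math bold, ...) to basic ones.
    text = unicodedata.normalize("NFKC", text)
    text = ZERO_WIDTH.sub("", text)
    text = text.casefold().translate(LEET_MAP)
    # Collapse long runs ("nooooob") down to two characters ("noob").
    return REPEATS.sub(r"\1\1", text)


print(normalize_chat("$7UP1D"))   # stupid
print(normalize_chat("n00000b"))  # noob
```

Even this small sketch shows why simple rules fall short: mapping "1" to "i" also mangles legitimate numbers, and every new evasion trick needs another hand-written rule.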

Once you’ve gotten past the point of understanding the message, you need to adjust that understanding and judgment based on where it was said. Different games can have very different communities with very different expectations of appropriate conduct. What flies just fine in Call of Duty may very well not be acceptable in a game like Minecraft. The moderation system needs to have the flexibility to adjust its judgments based on each particular community’s policies.
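As a rough sketch of what per-community flexibility can mean in practice, the Python below applies two different policies to the same classified message. Everything here is an assumption made for illustration: the `CommunityPolicy` class, the topic names, the 0 to 3 severity scale, and the two example communities are hypothetical and are not Community Sift’s actual data model.

```python
from dataclasses import dataclass, field


@dataclass
class CommunityPolicy:
    name: str
    # Highest severity (0 = benign ... 3 = severe) tolerated per topic.
    # The scale and the topics are illustrative assumptions.
    max_allowed: dict[str, int] = field(default_factory=dict)
    # Topics the policy does not mention fall back to the strictest setting.
    default_max: int = 0

    def allows(self, topic_severity: dict[str, int]) -> bool:
        """Return True only if every detected topic is within this policy's limit."""
        return all(
            severity <= self.max_allowed.get(topic, self.default_max)
            for topic, severity in topic_severity.items()
        )


# Two hypothetical communities with different expectations.
MATURE_SHOOTER = CommunityPolicy(
    "mature-shooter", {"vulgarity": 2, "violence": 2, "harassment": 0}
)
KIDS_SANDBOX = CommunityPolicy(
    "kids-sandbox", {"vulgarity": 0, "violence": 1, "harassment": 0}
)

# Pretend an upstream classifier produced these labels for one message.
message_labels = {"vulgarity": 2, "violence": 1}

print(MATURE_SHOOTER.allows(message_labels))  # True
print(KIDS_SANDBOX.allows(message_labels))    # False
```

The point of the design is that the understanding of the message is computed once, while the judgment is a separate, configurable step for each community.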

Language is also ever-changing. Pop culture and current events create new terms and give words meanings that they didn’t have before. And, of course, kids will be kids. Every generation claims the language as its own and comes up with new words, new meanings, and new phrases. Any solution to combat toxicity needs to be able to adapt to sudden changes in language.

Context also matters. Today, many content moderation systems just look at a single line of text, usually the one that was reported or flagged. But have you ever been in a room and heard only part of a conversation? Or has someone ever misunderstood something you’ve said? In these instances, how are you really supposed to understand what someone has said without knowing the full context?

Using generative AI, Community Sift can now examine the context before and after a reported line of text and decide whether, given that context, the content is harmful or toxic. Some phrases or words are inherently ambiguous without an understanding of the broader context. We also frequently see benign words reused for nefarious purposes. Community Sift can understand these sorts of nuances. It also learns continuously, adapting to the ever-changing language your gamers use.
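The idea of looking before and after a reported line can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions: the `Message` type, the `context_window` function, and the default window sizes are hypothetical, and how the resulting window is actually presented to a model is not covered here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Message:
    sender: str
    text: str


def context_window(
    messages: list[Message], reported_index: int, before: int = 3, after: int = 2
) -> list[Message]:
    """Return the reported message plus its neighbors, clipped at the chat's edges."""
    if not 0 <= reported_index < len(messages):
        raise IndexError("reported_index is outside the conversation")
    start = max(0, reported_index - before)
    end = min(len(messages), reported_index + after + 1)
    return messages[start:end]


chat = [
    Message("ana", "gg, that last round was close"),
    Message("ben", "I'm going to destroy you next match"),
    Message("ana", "haha bring it on"),
]
# The second line was reported; the neighbors show it was friendly banter.
for message in context_window(chat, reported_index=1, before=1, after=1):
    print(f"{message.sender}: {message.text}")
```

Read alone, the reported line could look like a threat. With one message on either side, it reads as competitive banter between friends.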

A Few Bad Apples

Toxicity begins with a few people—most studies suggest it’s 10% of people or fewer who are responsible for over 90% of the toxicity in a community. But toxicity causes more toxicity. One toxic message has a lasting effect and influence on other players’ behavior. Community Sift can find the players who are causing that toxic behavior, understand and scrutinize the frequency of their behavior, then take the right actions to keep the community safe.
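As a rough illustration of what scrutinizing frequency can look like, the Python sketch below counts flagged messages per player and reports what share of them comes from the most frequent offenders. The function name `top_offender_share` and the 10% default are our own assumptions for the example, and the data is made up.

```python
import math
from collections import Counter


def top_offender_share(
    flagged_senders: list[str], total_players: int, top_fraction: float = 0.10
) -> tuple[list[str], float]:
    """Return the most-flagged players and the share of all flags they account for.

    flagged_senders holds one entry per flagged message (the sender's ID).
    """
    counts = Counter(flagged_senders)
    # Size of the "top" group, e.g. 10% of the whole community, at least one player.
    group_size = max(1, math.ceil(total_players * top_fraction))
    top = counts.most_common(group_size)
    total_flags = sum(counts.values())
    share = sum(n for _, n in top) / total_flags if total_flags else 0.0
    return [player for player, _ in top], share


# Made-up data: a community of 10 players with 10 flagged messages.
flags = ["player_a"] * 9 + ["player_b"]
players, share = top_offender_share(flags, total_players=10)
print(players, f"{share:.0%}")  # ['player_a'] 90%
```

A report like this only identifies where the behavior is concentrated. Deciding on the right action for each player is a separate policy question.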

Child grooming and radicalization are among what we refer to as “complex harms,” and they occur across all social platforms. Needless to say, we firmly believe that they should not be tolerated in any community.

Complex harms are difficult to detect. They happen over long periods of time, where a trusting relationship is built. When looked at in isolation, the chats where this is occurring are typically nondisruptive. They can even seem positive in many cases. In the case of complex harms, these positive conversations gradually and subtly turn negative.

In many grooming cases, children believe that they are in a real relationship with the predator. Because the predator pretends to be their age in a space where other children gather, the victim doesn’t understand that they are being manipulated. According to the FBI, an estimated 89% of sexual advances directed at children occur through text in internet chatrooms or through instant messaging.

We believed we could leverage Community Sift to do something about it, so we went to work. Earlier this year, Community Sift created experimental tech powered by generative AI to see if we could outperform our existing tools and processes to better categorize conversations and identify behaviors indicative of grooming.

In the course of our ongoing experiments, the team has created highly accurate model detection processes and analysis techniques which will serve as the foundation of a solution that we’ll bring online in the future.

Currently, we are able to identify when a conversation starts to transition, turning from what appears to be a positive conversation to a negative one. This allows us to intervene before it crosses the line—not after. By leveraging generative AI, we can more easily and effectively analyze long-form text and ongoing conversations to identify behaviors that are indicative of these complex harms. This empowers us to perform the necessary human-based investigations to identify and act on these horrific incidents.

We are excited about our initial learnings in this area and are continuing to invest in ways to integrate these developments into Community Sift.

But it’s not all about the tech. Microsoft, Xbox, and Community Sift have been focused on identifying this sort of behavior for a very long time. We’ve established deep partnerships with the FBI, the National Center for Missing and Exploited Children, and the Crisis Text Line. And we’re happy to help you pursue these partnerships as well!

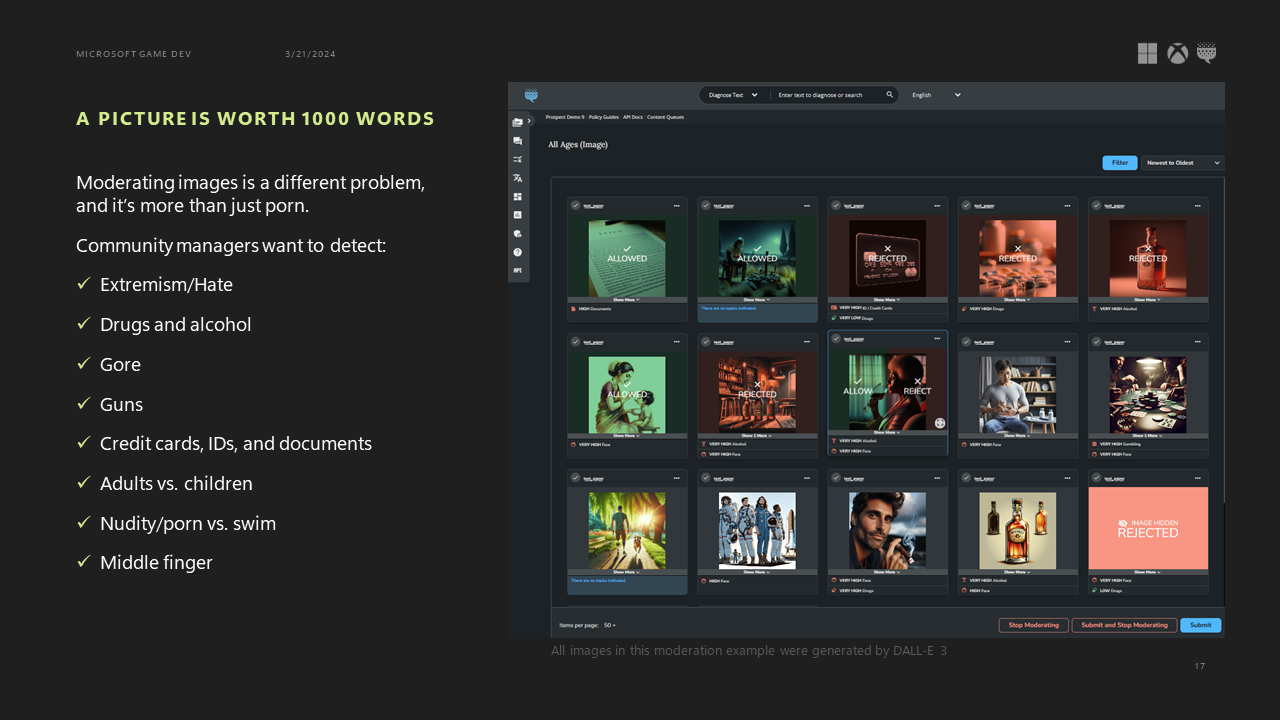

A Picture Is Worth 1000 Words

A lot of communities allow users to upload images for their avatars or to include in their messages, and to store screenshots from their games. But without rules and monitoring for what’s allowed, this can get problematic very quickly. Beyond porn, which makes up an estimated 10% of the internet and is probably the biggest category of concern for us, there is a wide spectrum of images that might not be acceptable for your community.

Xbox, for instance, has a policy where it will not allow images showing the middle finger in an obscene gesture to be used as a Gamerpic. But that same image could show up in some other places—it might be fine in private friend-to-friend communications, for example, depending on how those friends have defined their own preferences for viewable content.

These are among the common categories that companies frequently ask for our help in detecting, along with the flexibility to include or exclude a topic from the policy at will. For an adult community, you may not care about pictures of alcohol or gore. But you’re probably always going to care about pictures of credit cards. A child community might want to limit pictures of gambling or smoking. Overall, what’s required is flexibility to set rules and allow for exceptions where needed.

This is where AI shines. With text, you can get pretty far with relatively simple algorithms, rules, and dictionaries. Your protection won’t be as robust as what we can offer with Community Sift, but you can at least do something. But when it comes to images, you can’t even begin to do anything without leveraging AI. And when it comes to images that include text—like memes, but also photographs where written words occur organically, or snapshots of handwriting—we employ Optical Character Recognition (or OCR) to enable these same sorts of robust protections.

Now Do It for 22 Languages!

It’s important to consider scale when it comes to these efforts. 60% of the world is multilingual and it’s common for people to switch between multiple languages within the same conversation or within the same message, often to deliberately hide specific topics. Words that are fine in English may not be fine in other languages and vice versa.

All of this needs to happen in real time and at high volume. Our pro-active moderation, which provides the highest level of safety, runs on every message before players see it. It happens between the time the player hits enter and when the text shows up in the chat. Realistically, you have about 50 milliseconds to make and communicate a decision, taking all of that into account. This happens hundreds or thousands of times per second. Peak requests for a popular title can hit 5000 requests per second or more.
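A quick back-of-the-envelope calculation shows what those numbers imply. By Little’s Law, the average number of requests in flight equals the arrival rate multiplied by the time each request spends in the system. The helper name below is ours, and treating the full 50 milliseconds as the time in the system is a simplifying assumption.

```python
def requests_in_flight(requests_per_second: float, latency_ms: float) -> float:
    """Little's Law: average concurrency = arrival rate x time in system."""
    return requests_per_second * latency_ms / 1000.0


# Peak figures quoted above: 5000 requests per second, 50 ms per decision.
print(requests_in_flight(5000, 50))  # 250.0
```

In other words, at that peak a system that uses its whole budget on each message is working on roughly 250 decisions at any given instant.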

And more volume is coming. The AI-generated content boom may be just around the corner. Some experts predict 99% of internet content will soon be AI generated. It isn’t a stretch to predict that bad actors will misuse AI chatbots to scale up complex harms like radicalization and grooming, which are time-consuming to mitigate. Where a bad actor can manage only a few simultaneous online conversations to achieve the desired outcome, a chatbot can run hundreds. That means more volume could soon be coming your way.

Human Moderation: Need, Cost, and Toll

With all this new technology, there is still a role and a need for humans in this process, but with the technology we can improve how and where we apply those human decision-making skills. The current job of human moderators is difficult. Combing through the worst that humanity has to offer can be one of the hardest jobs in the industry.

That said, humans are necessary for many reasons. The challenge we now face is prioritizing how we apply our human decision-making skills. Gray areas will always exist. AI can help us narrow that band of acceptable versus not-acceptable compared to our previous capabilities, but it would be hubris to believe we could get rid of ambiguity entirely.

Another reason is that reported content requires a different system. We distinguish between pro-active and reactive systems. As mentioned before, pro-active means scanning content before any user sees it, while the reactive approach involves examining content that a user has reported. If you use the exact same processes and settings for both, the reactive pass will simply repeat the result of the pro-active one. At Xbox, our moderators find that more than 70% of reports show no policy violation. Knowing that more than 70% of reports are closed with no action, we can rely on AI to help us be more efficient, allowing moderators to focus on that gray area.
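One way to picture this division of labor is a simple triage step that routes each report by how confident an automated check is. The Python below is a sketch under stated assumptions: the `Route` names, the `route_report` function, and the 0.05 and 0.95 cut-offs are hypothetical, and a real policy would set its own bands for each kind of harm.

```python
from enum import Enum


class Route(Enum):
    CLOSE_NO_ACTION = "close_no_action"  # clearly no violation
    HUMAN_REVIEW = "human_review"        # the gray area
    AUTO_ACTION = "auto_action"          # clearly a violation


def route_report(violation_score: float, low: float = 0.05, high: float = 0.95) -> Route:
    """Route a report by an assumed 0..1 score of how likely it violates policy."""
    if not 0.0 <= violation_score <= 1.0:
        raise ValueError("violation_score must be between 0 and 1")
    if violation_score <= low:
        return Route.CLOSE_NO_ACTION
    if violation_score >= high:
        return Route.AUTO_ACTION
    return Route.HUMAN_REVIEW


for score in (0.01, 0.50, 0.99):
    print(score, route_report(score).value)
```

Widening or narrowing the band between `low` and `high` is the lever here: a wider band sends more reports to people, a narrower one sends fewer.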

We should also expect that future laws are going to require humans to be in the loop. When content moderation systems act not just on content but on users, that can have real-world consequences. Escalating illegal content to law enforcement or other authorities is a big deal for which we can tolerate no margin of error. A full-cycle content moderation process still needs humans, but we should be able to make their jobs as efficient as possible, and at the same time more pleasant.

Community Sift’s Solution

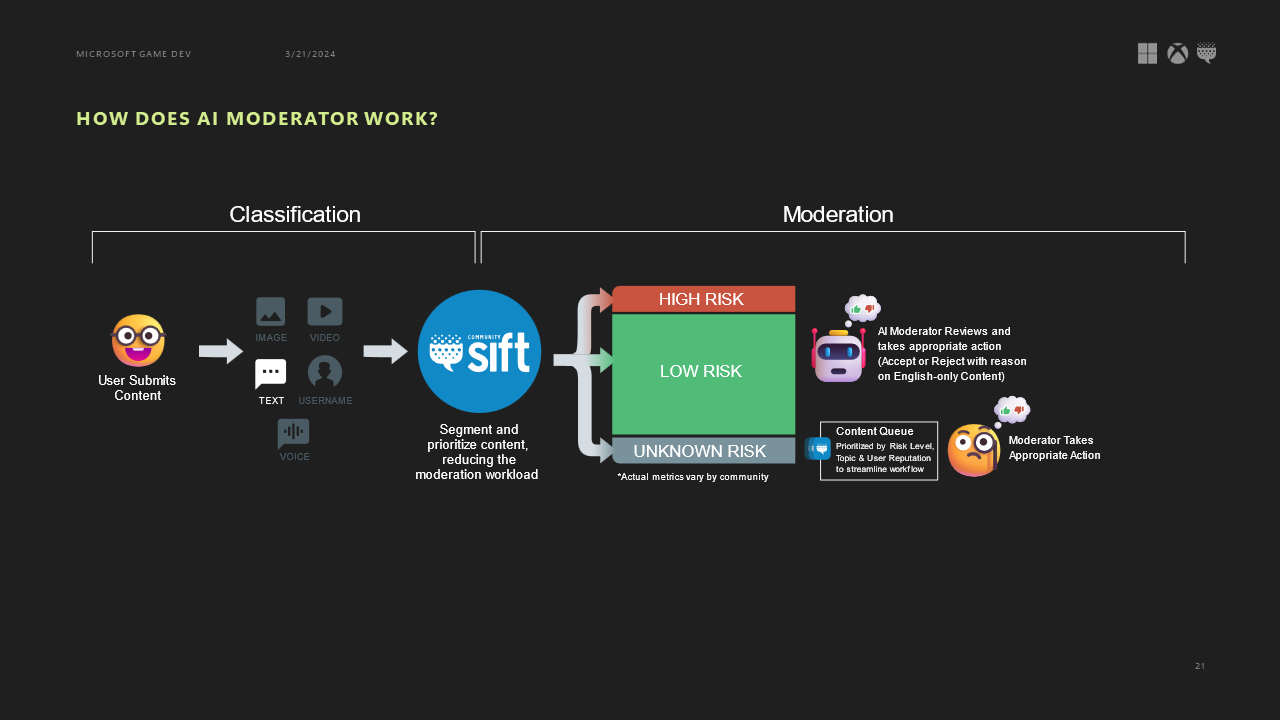

At Community Sift, we’ve taken nearly two decades of content moderation and safety experience and combined it with generative AI to scale content moderation while maintaining quality. One of the features we’ve released for Xbox and have available now for Sift customers through a Private Preview is called AI Moderator, or AI Mod for short. AI Mod will:

- Make decisions on content based on a customer’s specific policy.

- Reduce the amount of harmful content seen by human moderators.

- And enable our customers to scale.

By using AI Mod, our customers’ human moderators will be able to focus on the complex “gray area” content while the AI handles the stuff that is more obvious. In the past month, Community Sift’s AI Mod solution has reached over 98% accuracy on Xbox content.

Here’s how it works:

- First, the Community Sift team partners with you to craft a policy to fit your specific Terms of Use and Community Standards.

- Then, we help you tailor your policy to your unique needs and optimize the accuracy rating to 98% or higher.

- Next, the Community Sift team adds your AI Mod into your existing content moderation process.

- It will initially run in the background so you can analyze its decisions and build trust with AI Mod, like you might for a junior moderator you’re onboarding.

- Once you have established trust with AI Mod, the Community Sift team will turn it on and AI Mod will begin to make content moderation decisions based on your policy.
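The background phase in the steps above can be thought of as a shadow-mode comparison: the AI’s decisions are logged next to your moderators’ decisions and the two are compared before anything goes live. The sketch below is a hypothetical illustration of that check, not the actual onboarding tooling. The function names and the `min_samples` safeguard are assumptions, while the 98% target comes from the accuracy figure mentioned above.

```python
def shadow_agreement(pairs: list[tuple[str, str]]) -> float:
    """Fraction of cases where the AI decision matches the human decision.

    Each pair is (ai_decision, human_decision), e.g. ("allow", "block").
    """
    if not pairs:
        raise ValueError("need at least one decision pair")
    matches = sum(1 for ai, human in pairs if ai == human)
    return matches / len(pairs)


def ready_to_enable(
    pairs: list[tuple[str, str]], target: float = 0.98, min_samples: int = 1000
) -> bool:
    """Only consider going live with enough samples and enough agreement."""
    return len(pairs) >= min_samples and shadow_agreement(pairs) >= target


# Made-up shadow log: 98 matching decisions and 2 disagreements.
log = [("allow", "allow")] * 98 + [("allow", "block")] * 2
print(f"{shadow_agreement(log):.0%}")        # 98%
print(ready_to_enable(log))                  # False: only 100 samples so far
print(ready_to_enable(log, min_samples=100)) # True
```

The disagreements are as useful as the score itself: reviewing them is how a policy gets refined before AI Mod is switched on.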

AI Mod will not only lower your operational costs and allow your human moderators to concentrate on complicated content, but it will also help you expand your content moderation impact, keeping more of your players safe, happy, and engaged.

Community Sift works for companies and games of all sizes. We’re happy to walk you through the process, from establishing community standards that work for you, to recommending best practices for community monitoring and moderation workflows, to setting up a sandbox environment and trying it out yourself. If you’re managing a community and want to ensure a safe, friendly, and toxicity-free environment for your players, we’d love to hear from you!